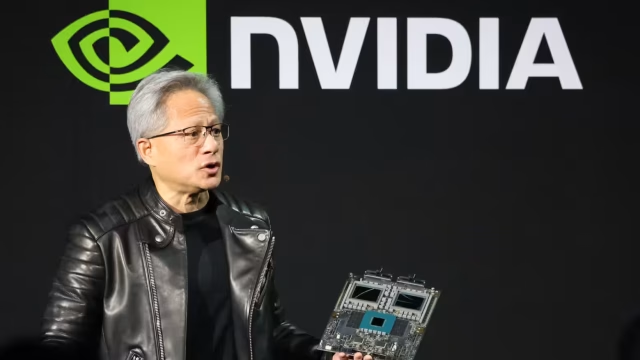

Nvidia Chief Executive Officer Jensen Huang said on Monday that the company’s next generation of chips has entered full production, highlighting a major leap in performance for artificial intelligence workloads. According to Huang, the new processors can deliver up to five times more AI computing power than Nvidia’s previous chips when running chatbots and other AI applications.

Speaking at the Consumer Electronics Show in Las Vegas, Huang shared fresh details about the upcoming chip lineup, which is scheduled to launch later this year. Nvidia executives said AI firms are already testing the chips in the company’s laboratories. The launch comes as competition intensifies from both traditional rivals and major customers developing their own processors.

At the center of the announcement was Nvidia’s Vera Rubin platform, which combines six separate Nvidia chips. The flagship server is expected to feature 72 graphics processing units and 36 newly designed central processing units. Huang demonstrated how the system can be scaled into large “pods” containing more than 1,000 Rubin chips, potentially making the generation of AI “tokens” as much as ten times more efficient.

Huang explained that, to achieve these performance gains, the Rubin chips rely on a proprietary data format that Nvidia hopes will be adopted more broadly across the industry. He noted that the sharp improvement was achieved even though the chips contain only about 1.6 times as many transistors as earlier designs.

While Nvidia continues to dominate the market for training AI models, competition is growing in the deployment of AI services to end users. Rivals include established chipmakers such as Advanced Micro Devices, as well as large customers like Alphabet’s Google, which is increasingly developing its own AI hardware.

A significant portion of Huang’s keynote focused on how the new chips improve performance for real-time AI applications. Nvidia also introduced a new storage layer called “context memory storage,” designed to help chatbots respond more quickly to long and complex queries.

The company also unveiled a new generation of networking switches featuring co-packaged optics, a technology critical for linking thousands of machines into a single system. These products place Nvidia in direct competition with networking specialists such as Broadcom and Cisco Systems.

Nvidia said cloud provider CoreWeave will be among the first customers to deploy the Vera Rubin systems. Nvidia also expects major cloud players, including Microsoft, Oracle, Amazon, and Alphabet, to adopt the platform.

Beyond data centers, Huang highlighted new software aimed at autonomous vehicles. Nvidia plans to release Alpamayo, a decision-making system for self-driving cars, along with the data used to train it. Huang said open-sourcing both the models and the data is essential for building trust and enabling automakers to properly evaluate the technology.

Last month, Nvidia also acquired talent and chip technology from startup Groq, including executives who previously helped Google design its own AI processors. Huang said the deal would not affect Nvidia’s core business but could lead to new products that expand its overall lineup.

Nvidia is also keen to demonstrate that its latest chips outperform older models such as the H200, which U.S. President Donald Trump has allowed to be sold to China. According to Reuters, demand for the H200 remains strong in China, raising concerns among U.S. policymakers.

Huang told analysts that interest in the H200 chip is robust, while Chief Financial Officer Colette Kress said Nvidia has applied for export licenses and is awaiting approval from U.S. and other authorities before shipping the chips to China.